Learning to add with LSTM

I know, Wolfram’s stuff ain’t the most popular when it comes to machine learning and AI. What I like about their sandbox is how easily one can experiment with ideas without having to dig deep into various frameworks.

Their neural network API is based on MXNet and is straightforward to use. Below, for example, is a handful of lines of code through which addition is learned. Can’t beat the fun here.

First you need a bit of data, of course. It is just a matter of assembling a dictionary of simple additions and their results.

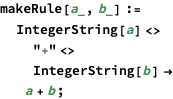

This function takes two integers and returns a rule corresponding to their addition:

With this, one can compile a dataset consisting of all combinations from zero to one hundred:

Out[3]//InputForm=

{"30+90" -> 120, "97+15" -> 112, "34+29" -> 63, "91+26" -> 117}
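For readers without a Wolfram kernel at hand, the data step can be sketched in plain Python. This is only an illustration of the idea, not the notebook's code: the names `addition_rule` and `build_dataset` are made up here, and treating "zero to one hundred" as an inclusive range is an assumption.

```python
import random


def addition_rule(a: int, b: int) -> tuple[str, int]:
    """Return the pair ("a+b", a + b), mirroring a rule like "30+90" -> 120."""
    return f"{a}+{b}", a + b


def build_dataset(upper: int = 100) -> dict[str, int]:
    """All combinations of two integers in 0..upper (inclusive bound is an assumption)."""
    return dict(
        addition_rule(a, b)
        for a in range(upper + 1)
        for b in range(upper + 1)
    )


dataset = build_dataset()

# Draw a few random entries, like the four-element sample shown above.
rng = random.Random(0)  # fixed seed so the sample is repeatable
sample = rng.sample(sorted(dataset.items()), 4)

print(len(dataset))  # 101 * 101 = 10201 entries
print(sample)
```

Every key is unique because each pair of operands produces a distinct string, so a plain dictionary is enough to hold the whole dataset.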

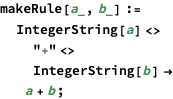

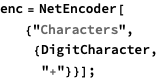

A neural network does not understand strings, so the input has to be converted to a tensor. In this case that is a vector corresponding to the characters in the input string:

For instance, the following addition is converted to a vector:

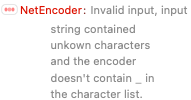

This encoder will fail if applied to something it wasn’t made for:

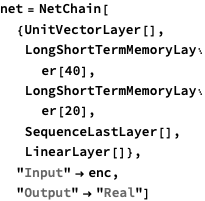
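The encoding idea, and the way it fails on unknown input, can be mimicked in a few lines of plain Python. Treat this as a sketch under assumptions: the vocabulary (ten digits plus `+`), the 1-based index order and the name `encode` are illustrative choices, and the codes a real encoder assigns may differ.

```python
# Assumed vocabulary: the ten digits followed by "+"; the index order is illustrative.
VOCAB = "0123456789+"
CHAR_TO_INDEX = {ch: i + 1 for i, ch in enumerate(VOCAB)}  # 1-based codes


def encode(text: str) -> list[int]:
    """Map each character to its integer code; reject anything outside VOCAB."""
    codes = []
    for ch in text:
        if ch not in CHAR_TO_INDEX:
            raise ValueError(f"cannot encode character {ch!r} in {text!r}")
        codes.append(CHAR_TO_INDEX[ch])
    return codes


print(encode("30+90"))  # [4, 1, 11, 10, 1]

# A character the encoder was not built for makes it fail loudly.
try:
    encode("30-90")
except ValueError as err:
    print("encoder failed:", err)
```

Failing on unknown characters is a reasonable default here: silently skipping the `-` would turn a subtraction into a different number and hide the problem.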

Finally, the actual neural network is assembled using a couple of LSTM layers. You are free to pick any architecture you like; composing neural networks is more of an art than a science. An LSTM layer carries an internal memory along the input axis, which makes sense since the output of an addition is directly related to previous input (unlike, say, poems or music):

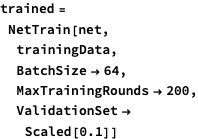
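To make the "internal memory" a bit more tangible, here is a forward pass through a single LSTM layer in plain Python. It is a toy with random, untrained weights and hypothetical names (`TinyLSTM`, `featurize`), not the network from the notebook; it only shows how the cell state is carried from one character to the next.

```python
import math
import random

VOCAB = "0123456789+"  # assumed character set


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyLSTM:
    """One LSTM layer with random, untrained weights (forward pass only)."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One weight row per gate and hidden unit, acting on [x, h_prev, bias].
        self.w = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(n_in + n_hidden + 1)]
                   for _ in range(n_hidden)]
            for gate in self.GATES
        }

    def step(self, x, h, c):
        z = list(x) + list(h) + [1.0]  # input, previous output, bias term
        pre = {gate: [sum(w * v for w, v in zip(row, z)) for row in self.w[gate]]
               for gate in self.GATES}
        new_h, new_c = [], []
        for k in range(self.n_hidden):
            i = sigmoid(pre["input"][k])
            f = sigmoid(pre["forget"][k])
            o = sigmoid(pre["output"][k])
            cand = math.tanh(pre["candidate"][k])
            ck = f * c[k] + i * cand  # memory: keep part of the old, add some new
            new_c.append(ck)
            new_h.append(o * math.tanh(ck))
        return new_h, new_c

    def run(self, sequence):
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:  # the state (h, c) travels along the sequence
            h, c = self.step(x, h, c)
        return h  # output after the last character


def featurize(text: str):
    """One-hot vector per character."""
    return [[1.0 if k == VOCAB.index(ch) else 0.0 for k in range(len(VOCAB))]
            for ch in text]


lstm = TinyLSTM(n_in=len(VOCAB), n_hidden=4)
print(lstm.run(featurize("12+34")))
print(lstm.run(featurize("34+12")))  # same characters, different order
```

The two printed vectors differ even though both strings contain the same characters, because each step depends on what came before. A real model would add a final linear layer that maps this last state to a single number, and training would tune all the weights.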

The training is also straightforward; we hold out 10% of the data for validation:
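What holding out 10% amounts to can be sketched in plain Python. The helper name `split_train_validation`, the fixed seed and the inclusive 0 to 100 range are assumptions made for this illustration; a framework typically does this split for you.

```python
import random


def split_train_validation(pairs, validation_fraction=0.1, seed=0):
    """Shuffle the examples and hold out a fraction of them for validation."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_val = int(round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


# Same kind of data as before: ("a+b", a + b) for all pairs in 0..100 (assumed range).
pairs = [(f"{a}+{b}", a + b) for a in range(101) for b in range(101)]

train, validation = split_train_validation(pairs)
print(len(train), len(validation))  # 9181 1020
```

The validation examples never take part in the weight updates, so the error on them shows whether the network has learned to add or has merely memorized the training strings.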

Now you can give the network any addition with numbers below one hundred:

Simple, powerful and enticing at the same time.